Introduction

Generative AI, like ChatGPT, is pretty cool. It can talk like a human and understand complex stuff. But here’s the thing: LLMs are trained on large volumes of public data. To get the full benefit of an LLM, the model needs to be adapted with your company’s domain-specific data.

So, how can businesses use generative AI without giving away their secrets? Well, there are three main ways. In this blog, we’ll break them down for you. We’ll talk about what’s good, what’s not so good, and how each way can help businesses get the most out of AI while keeping their secrets safe. Let’s dive in!

But first, let’s explore the three LLM data training approaches.

3 LLM Data Training Approaches

1) Training from scratch – Building Your Own LLM

- Imagine constructing a brand new LLM from the ground up. This approach involves feeding the model massive amounts of data (think billions of words) to help it learn language patterns and relationships.

- Pros: Perfect for organizations with vast resources and unique needs, allowing for complete customization.

- Cons: Requires a massive dataset, significant computational power, and deep data science expertise. Not ideal for everyone!

Example

GPT-3, a large language model, was trained from scratch on a huge amount of diverse text data from the internet, including websites, books, and other public sources. OpenAI used its own tooling to gather and clean this data. The model has 175 billion parameters, and training it was extremely costly: by some estimates, the bill for hardware and electricity alone potentially ran into the millions or even tens of millions of dollars.
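To see why training from scratch gets expensive, here is a rough back-of-the-envelope sketch. It uses the common rule of thumb that training compute is about 6 x parameters x training tokens. The 300 billion token count is the figure publicly reported for GPT-3 and does not come from this post; the accelerator speed, utilization, and hourly price are purely hypothetical assumptions, so treat the dollar figure as an illustration and not as what OpenAI actually paid.

```python
def training_flops(n_params: float, n_tokens: float) -> float:
    """Approximate training compute with the 6 * N * D rule of thumb."""
    return 6.0 * n_params * n_tokens


def training_cost_usd(total_flops: float,
                      peak_flops_per_sec: float,
                      utilization: float,
                      usd_per_accelerator_hour: float) -> float:
    """Convert total compute into a rough rental cost (compute only)."""
    effective_flops_per_sec = peak_flops_per_sec * utilization
    accelerator_hours = total_flops / effective_flops_per_sec / 3600
    return accelerator_hours * usd_per_accelerator_hour


# 175 billion parameters (from the example above), ~300 billion tokens (assumed).
flops = training_flops(n_params=175e9, n_tokens=300e9)

# Hypothetical hardware assumptions: 100 TFLOP/s peak, 30% utilization, $2/hour.
cost = training_cost_usd(flops,
                         peak_flops_per_sec=100e12,
                         utilization=0.30,
                         usd_per_accelerator_hour=2.0)

print(f"Estimated compute: {flops:.2e} FLOPs")
print(f"Estimated compute cost: ${cost:,.0f}")
```

Even under these optimistic assumptions the compute bill alone lands in the millions of dollars, before counting failed runs, data preparation, storage, or staff.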

2) Fine-Tuning: Adapting a Pre-Trained LLM to Your Domain

- Think of fine-tuning as taking a pre-trained LLM (like a pre-built house) and remodeling it for your specific needs. You’ll use data relevant to your domain to adjust the model’s behavior.

- Pros: Ideal for leveraging existing models for specific tasks. Requires far less data than training from scratch.

- Cons: Expertise needed for data preparation and the fine-tuning process itself. May not be the best fit for entirely new content areas.

Example

The Central Statistics Office (CSO) Ireland needed to switch from SAS (Statistical Analysis System) to R as its main programming language, which required translating legacy SAS code into equivalent R code. Its data science team started exploring LLMs to simplify the conversion and eventually chose OpenAI.

They fine-tuned selected models to improve translation quality and saw visible improvements in the accuracy and reliability of the translations.
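Most of the work in a fine-tuning project like this is preparing the training data. The sketch below shows one way to turn SAS/R code pairs into the JSONL chat format that OpenAI’s fine-tuning service accepts (one JSON object per line, each with a `messages` list). The post does not describe how the CSO actually prepared its data, so the system prompt, the helper names, and the two code pairs are hypothetical, and the pairs are rough illustrations, not vetted translations.

```python
import json

# Hypothetical instruction shown to the model with every training example.
SYSTEM_PROMPT = "You translate SAS code into equivalent R code."


def to_chat_example(sas_code: str, r_code: str) -> dict:
    """Wrap one SAS/R pair as a chat-style fine-tuning example."""
    return {
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": sas_code},
            {"role": "assistant", "content": r_code},
        ]
    }


def to_jsonl(pairs) -> str:
    """Serialize all pairs as JSONL: one JSON object per line."""
    return "\n".join(json.dumps(to_chat_example(sas, r)) for sas, r in pairs)


# Illustrative pairs only; a real dataset would need many reviewed examples.
pairs = [
    ("proc means data=sales; var revenue; run;", "summary(sales$revenue)"),
    ("proc sort data=sales; by region; run;", "sales <- sales[order(sales$region), ]"),
]

jsonl = to_jsonl(pairs)
print(jsonl)
```

The resulting text can be saved to a `.jsonl` file and uploaded as training data. The quality and coverage of these pairs matter more than the code that formats them.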

3) Prompt-Tuning: The Art of Guiding Your LLM with Crafty Prompts

- Imagine giving your LLM clear instructions (prompts) to achieve the desired outcome. This approach, often called prompt engineering, involves crafting specific prompts that guide the pre-trained LLM’s output toward your needs without changing the model itself.

- Pros: The most common approach for those starting with pre-trained models and limited data. Requires less data preparation than the other methods.

- Cons: Crafting effective prompts is crucial, and the level of customization may be lower compared to other methods.
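A crafted prompt usually combines an instruction, a few worked examples, and the new input. Here is a minimal sketch of such a template. The function name, the ticket-classification task, and the example texts are hypothetical and only illustrate the idea; the assembled string would then be sent to whichever pre-trained model you use.

```python
def build_prompt(instruction: str, examples, query: str) -> str:
    """Assemble an instruction, a few worked examples, and the new input."""
    parts = [instruction.strip(), ""]
    for example_input, example_output in examples:
        parts += [f"Input: {example_input}", f"Output: {example_output}", ""]
    # Leave the final output empty so the model completes it.
    parts += [f"Input: {query}", "Output:"]
    return "\n".join(parts)


prompt = build_prompt(
    instruction="Classify each support ticket as 'billing', 'technical', or 'other'.",
    examples=[
        ("I was charged twice this month.", "billing"),
        ("The app crashes when I open settings.", "technical"),
    ],
    query="How do I update my credit card?",
)
print(prompt)
```

Changing the instruction or swapping the examples changes the model’s behavior without any retraining, which is why this approach needs so little data preparation.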

After the example below, three comparison factors will help you decide which approach is best for your company.

Example

The IMF (International Monetary Fund) updated its statistics processing and distribution platforms to align with modern best practices, such as search and browsing capabilities. A prototype called StatGPT was developed, using Generative AI and prompt-tuning to help users access data on the distribution platform.

In this prototype, ChatGPT’s main job is to understand a natural language prompt, extract the necessary parameters from it, use them to build query parameters, and run a data query against an API that returns statistical data.
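The flow described above (natural language in, query parameters out, then an API call) can be sketched as follows. This is not StatGPT’s actual implementation: the prompt wording, the parameter names, the `example.org` endpoint, and the hard-coded model reply are all hypothetical stand-ins, and the call to the model itself is left out.

```python
import json
from urllib.parse import urlencode

# Hypothetical set of parameters the data API understands.
ALLOWED_KEYS = {"indicator", "country", "start_year", "end_year"}

# Hypothetical prompt asking the model to reply with JSON only.
EXTRACTION_PROMPT = (
    "Extract the query parameters from the user's question and reply with JSON only, "
    "using the keys indicator, country, start_year, end_year.\n"
    "Question: {question}"
)


def parse_model_reply(reply: str) -> dict:
    """Parse the model's JSON reply and reject anything unexpected."""
    params = json.loads(reply)
    if not isinstance(params, dict):
        raise ValueError("model reply must be a JSON object")
    unknown = set(params) - ALLOWED_KEYS
    if unknown:
        raise ValueError(f"unexpected keys: {sorted(unknown)}")
    return params


def build_query_url(base_url: str, params: dict) -> str:
    """Turn validated parameters into a query URL for the data API."""
    return f"{base_url}?{urlencode(sorted(params.items()))}"


prompt = EXTRACTION_PROMPT.format(
    question="What was GDP growth in Ireland from 2015 to 2020?"
)

# Stand-in for the model's answer; a real system would send `prompt` to the LLM.
fake_reply = '{"indicator": "GDP_GROWTH", "country": "IE", "start_year": 2015, "end_year": 2020}'

url = build_query_url("https://example.org/api/data", parse_model_reply(fake_reply))
print(url)
```

Validating the model’s reply before building the query is a deliberate choice here: the model only proposes parameters, and ordinary code decides what actually reaches the API.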

3 Key Factors to Check Before Choosing the Right Approach

Factor 1: Suitability

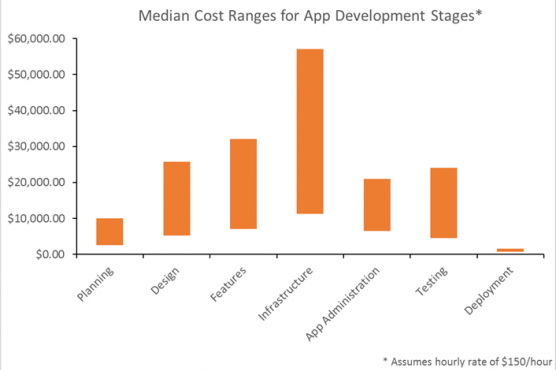

Factor 2: Cost & Timeline

Factor 3: Data Requirement & Data Science Expertise

Considerations

Think of LLMs as super-powered assistants that can learn and adapt to your specific needs. Just like ChatGPT, they can handle tasks like writing various kinds of content, translating languages, and even generating creative text formats.

- Security: Sending sensitive data to the cloud may not be an option for many organizations. This is where on-premises LLMs emerge as a compelling alternative. On-premises LLMs allow you to train and run your own model entirely within your organization’s own infrastructure.

- Risk Tolerance: How much risk can your organization tolerate? If you prioritize a safe but potentially less customizable option, pre-trained models with fine-tuning offer this. If you are willing to take on more risk for maximum control, building a custom LLM allows this but demands significant expertise and resources.

- Maturity: Evaluate your team’s technical capabilities – can you handle in-house development or is outsourcing necessary? Think about future growth and how your chosen approach can scale to handle more data or complex tasks.

- Ethics: Check your training data for bias, and develop strategies to mitigate potential biases in the model’s outputs.

By carefully considering these factors, you can make an informed decision about the LLM approach that best aligns with your project’s needs and your risk tolerance.

Taking the First Step:

Feeling overwhelmed? Don’t worry! Contact us to discover how LLMs can propel your business forward, and get more details about our AI/ML services.
