What is the difference between LLM and SLM?

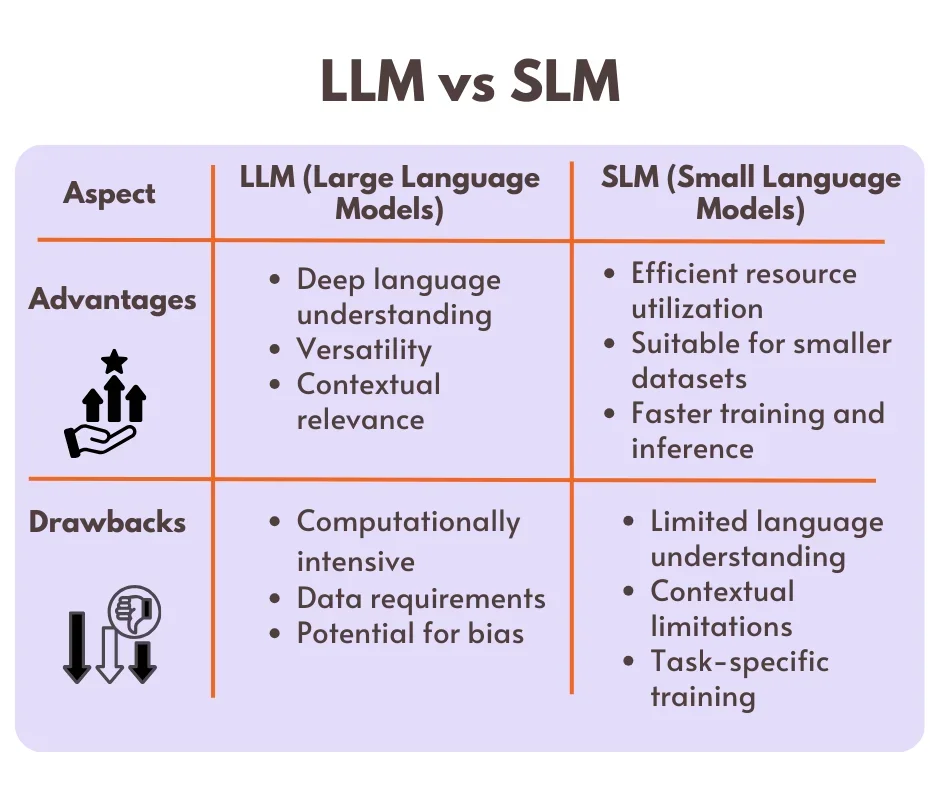

The main distinction between a large language model (LLM) and a small language model (SLM) lies in their capacity, their performance, and the volume of data used to train them. Large language models such as GPT-3 (175 billion parameters) are trained on extensive datasets and have a very large number of parameters. This lets them understand and generate human-like text with remarkable accuracy and coherence. Small language models have fewer parameters and are trained on more limited datasets, which can constrain their ability to capture and generate intricate language patterns.
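To make "number of parameters" more concrete, here is a rough sketch that estimates the size of a GPT-style decoder-only transformer from its configuration. It relies on a common rule of thumb (about 12·d² weights per layer, plus a vocabulary × d token-embedding table) and ignores biases, layer norms, and positional embeddings, so treat the results as approximations. The configurations used are the published ones for GPT-2 small (12 layers, width 768) and GPT-3 (96 layers, width 12,288).

```python
def approx_decoder_params(n_layers: int, d_model: int, vocab_size: int) -> int:
    """Rough parameter count for a GPT-style decoder-only transformer.

    Per layer: ~4*d^2 attention weights (Q, K, V and output projections)
    plus ~8*d^2 MLP weights (two d x 4d matrices), i.e. ~12*d^2.
    Biases, layer norms and positional embeddings are ignored.
    """
    per_layer = 12 * d_model ** 2
    token_embeddings = vocab_size * d_model
    return n_layers * per_layer + token_embeddings


# Published configurations: GPT-2 small and GPT-3 (175B).
gpt2_small = approx_decoder_params(n_layers=12, d_model=768, vocab_size=50257)
gpt3 = approx_decoder_params(n_layers=96, d_model=12288, vocab_size=50257)

print(f"GPT-2 small: ~{gpt2_small / 1e6:.0f}M parameters")
print(f"GPT-3:       ~{gpt3 / 1e9:.0f}B parameters")
```

The estimates come out at roughly 124M and 175B parameters. The gap of more than three orders of magnitude is mostly driven by the d² term, because widening the model grows every layer quadratically.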

Large language models excel at capturing and generating a wide range of linguistic nuance, context, and semantics. This makes them well suited to many natural language processing tasks, including language translation, text summarization, and question answering. Smaller language models are less capable, but they can still be effective for simpler language processing tasks and for applications with limited computing resources.

To sum up, the key distinction between large and small language models in generative AI is scale. Large models, with their extensive training data and parameter counts, offer greater power and versatility, while small models trade some of that capability for much lower resource requirements. When comparing the two, consider factors such as data requirements and modality performance.

The overall artificial intelligence market, which includes LLMs and SLMs, is forecast to be worth $909 billion by 2030, growing at a compound annual growth rate (CAGR) of 35% (Source: Verdict UK).

Data Requirements

Data requirements are crucial when it comes to training a language model. The amount and quality of data play a significant role in determining the model’s performance. Larger language models require extensive amounts of high-quality data to achieve optimal performance.

Smaller language models, by contrast, can be trained effectively on smaller datasets. Larger models have far more parameters to fit, so they need correspondingly more data to learn effectively and generalize well.
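A rough way to see how data and compute needs grow with model size is sketched below. It assumes two widely used heuristics that this article does not itself cite: roughly 20 training tokens per parameter for compute-efficient training (from DeepMind's Chinchilla study), and about 6 FLOPs per parameter per training token. Real training runs often deviate from these numbers; GPT-3, for example, was trained on roughly 300 billion tokens.

```python
TOKENS_PER_PARAM = 20  # Chinchilla-style rule of thumb (an assumption, not a law)


def compute_optimal_tokens(num_params: float) -> float:
    """Training tokens suggested by the ~20-tokens-per-parameter heuristic."""
    return TOKENS_PER_PARAM * num_params


def training_flops(num_params: float, num_tokens: float) -> float:
    """Approximate training compute: ~6 FLOPs per parameter per token
    (forward plus backward pass)."""
    return 6 * num_params * num_tokens


models = {"small (124M params)": 124e6, "large (175B params)": 175e9}
for name, params in models.items():
    tokens = compute_optimal_tokens(params)
    flops = training_flops(params, tokens)
    print(f"{name}: ~{tokens:.1e} tokens, ~{flops:.1e} training FLOPs")
```

Because the data requirement grows linearly with parameter count, training compute grows roughly with its square: a model about 1,400 times larger needs about two million times the compute under these assumptions.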

Modality Performance

Modality performance refers to how well a model handles inputs other than plain text, such as speech, images, and video. Large models, especially multimodal ones built with extensive training data and parameters, generally handle diverse modalities better. Smaller language models may struggle with more complex modalities.

Training your own language model, especially a large one, typically requires external tools and infrastructure. The following resources can help.

Tools for Training Advanced Large Language Models

1) OpenAI's GPT-3

OpenAI provides access to the GPT-3 model and its API, allowing developers to leverage this powerful LLM for various natural language processing tasks. OpenAI also offers documentation and resources for understanding and utilizing GPT-3.

2) Hugging Face's Transformers Library

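Hugging Face's library provides a wide range of pre-trained LLMs, including GPT-2 and other open-weight models, along with tools for fine-tuning them on custom datasets. (GPT-3 itself is not distributed through the library; its weights are not public and it is accessed through OpenAI's API.) The library offers extensive documentation, tutorials, and community support for training and using LLMs.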

3) Cloud-based AI Platforms

Cloud providers such as Google Cloud AI, Amazon Web Services (AWS), and Microsoft Azure offer services for training and deploying custom LLMs. These platforms provide the infrastructure and tools necessary for training large-scale language models, along with support for managing and scaling the training process.

Small Language Models: Essential Resources for Effective Training

1) Hugging Face's Transformers Library

In addition to LLMs, Hugging Face’s Transformers library offers a variety of pre-trained SLMs, such as BERT and RoBERTa, along with resources for fine-tuning these models on specific tasks. The library’s documentation and community support make it a valuable resource for training custom SLMs.

2) Transfer Learning Toolkit

NVIDIA's Transfer Learning Toolkit (since renamed the TAO Toolkit) provides a comprehensive set of tools and pre-trained models for training custom SLMs. The toolkit is designed to streamline fine-tuning and deploying SLMs on NVIDIA GPU-accelerated systems.

3) Open-Source Frameworks

Open-source frameworks like PyTorch and TensorFlow offer a wealth of resources for training custom SLMs, including pre-trained models, tutorials, and community forums for sharing knowledge and best practices.


When should you use one over the other?

- Use large language models (LLMs) for tasks that demand a deep grasp of natural language and the generation of human-like text, such as language translation, text summarization, and content generation.

- LLMs are also highly beneficial for tasks that require answering open-ended questions and for conversational agents, as they can produce responses that are contextually relevant and coherent.

- SLMs are well suited to tasks that require a more structured understanding of language, such as sentiment analysis, named entity recognition, and text classification, where they can process textual data precisely and efficiently.

- SLMs are also effective when the main goal is to extract specific information or identify patterns in text, especially where precision matters more than open-ended generation.

- SLMs work well for tasks that involve relating elements of a text to one another, such as determining the sentiment of a sentence or categorizing the subject matter of a document.

Choosing between an LLM and an SLM for a given NLP task starts with understanding the task's specific requirements, because each model type has its own strengths and limitations.

Choosing the Right Model

The best choice really depends on what you specifically need and the context you’re in.

Consider the following factors when choosing between an SLM and an LLM:

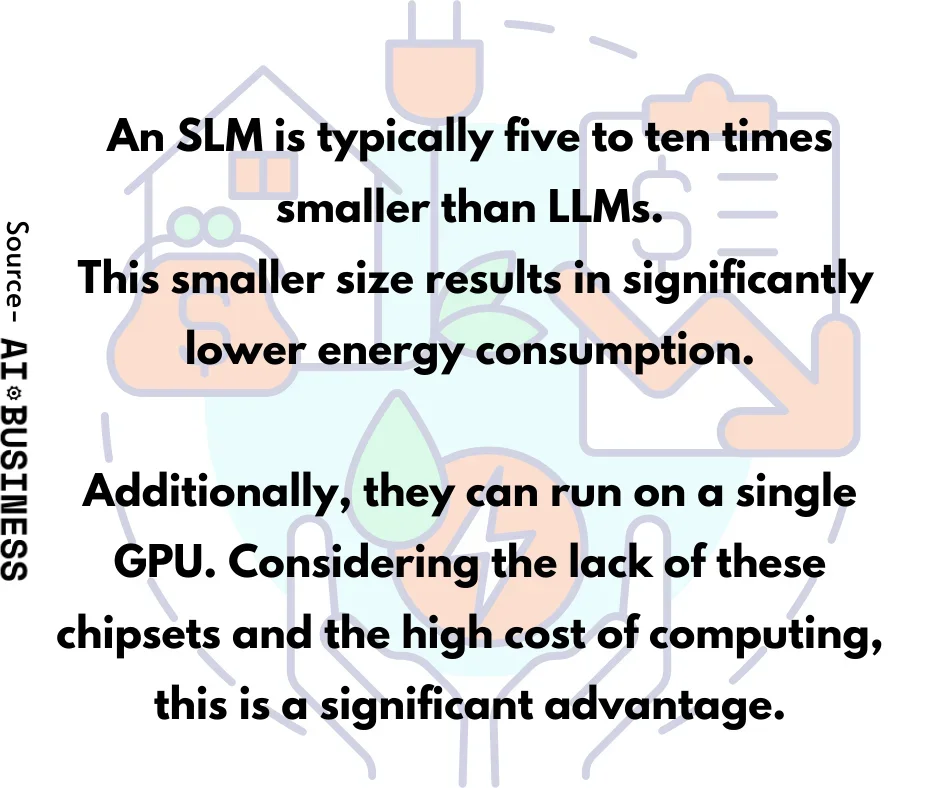

- Resource constraints – If you have limited computational power or memory, an SLM is the obvious choice.

- Task complexity – For highly complex tasks, an LLM might be necessary to ensure optimal performance.

- Domain specificity – If your task is specific to a particular domain, you can fine-tune either model on relevant data. However, SLMs may hold an advantage in this regard.

- Interpretability – If understanding the model’s reasoning is vital, an SLM would be the preferred option.

By weighing these considerations, you can make an informed decision on which model best suits your needs.
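The checklist above can be turned into a small decision helper. This is a minimal sketch under stated assumptions: the names `Requirements`, `weight_memory_gb`, and `choose_model` are hypothetical, the memory check covers model weights only (activations, KV cache, and training optimizer state add more), and the priority order of the rules is a judgment call. Domain specificity is left out because, as noted above, either model type can be fine-tuned on domain data.

```python
from dataclasses import dataclass

# Bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_gb(num_params: float, dtype: str = "fp16") -> float:
    """Memory for the weights alone; activations, KV cache and optimizer
    state (needed for training) come on top of this."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


@dataclass
class Requirements:
    available_memory_gb: float   # memory on the target device
    llm_params: float            # size of the candidate LLM
    task_is_complex: bool        # e.g. open-ended generation or reasoning
    needs_interpretability: bool


def choose_model(req: Requirements, dtype: str = "fp16") -> str:
    """Apply the factors in a fixed (assumed) order of priority."""
    # Resource constraints: if the LLM's weights do not even fit, use an SLM.
    if weight_memory_gb(req.llm_params, dtype) > req.available_memory_gb:
        return "SLM"
    # Interpretability: favor the smaller, easier-to-inspect model.
    if req.needs_interpretability:
        return "SLM"
    # Task complexity: reserve the LLM for tasks that need it.
    return "LLM" if req.task_is_complex else "SLM"


# A hypothetical 7B-parameter LLM needs ~14 GB for its fp16 weights.
print(weight_memory_gb(7e9))
print(choose_model(Requirements(16, 7e9, task_is_complex=True, needs_interpretability=False)))
print(choose_model(Requirements(8, 7e9, task_is_complex=True, needs_interpretability=False)))
```

With 16 GB available, the complex task is routed to the LLM; with 8 GB the weights alone do not fit, so the helper falls back to an SLM.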

Fine-Tuning Capabilities

Fine-tuning in machine learning refers to further training a pre-existing, often large and general-purpose model on a specific task or dataset. This lets the model adapt what it has already learned to a particular domain or set of tasks. The idea is to reuse the knowledge the model gained during its initial training on a large, varied dataset and then tailor it to a narrower, specialized application.

Fine-Tuning of LLMs

LLMs such as GPT-3 can be fine-tuned on task-specific data, improving their ability to generate precise, contextually relevant text. This approach matters because training a large language model from scratch is extremely costly in computational resources and time.

By building on the knowledge already captured in a pre-trained model, we can achieve high performance on specific tasks with significantly less data and compute than training from scratch would require.

Fine-tuning is especially valuable in the following scenarios:

- Transfer Learning – Fine-tuning plays a critical role in transfer learning, allowing the knowledge of a pre-trained model to be applied to a new task. Starting from a pre-trained model and refining it for a specific task shortens training, and the model can draw on its general language understanding for the new task. This saves considerable time and compute compared with training from scratch.

- Limited Data Availability – Fine-tuning is especially advantageous when little labeled data is available for a specific task. Rather than starting from scratch, you can build on the knowledge of a pre-trained model and adapt it to your task with a smaller dataset.
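The benefit of starting from pre-trained weights can be shown with a deliberately tiny toy. This is an illustration only: a one-variable linear model stands in for a pre-trained network, and both tasks are hypothetical synthetic datasets. The model is first "pre-trained" on a large, general dataset (y = 2x + 1), then given only five gradient steps on a small, related task (y = 2x + 1.5). The same five steps are also run from scratch for comparison.

```python
def mse(w, b, xs, ys):
    """Mean squared error of the linear model y = w*x + b."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def train(w, b, xs, ys, steps, lr=0.1):
    """Plain full-batch gradient descent on the MSE loss."""
    n = len(xs)
    for _ in range(steps):
        residuals = [w * x + b - y for x, y in zip(xs, ys)]
        grad_w = 2 * sum(r * x for r, x in zip(residuals, xs)) / n
        grad_b = 2 * sum(residuals) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# "Pre-training": a large, general dataset following y = 2x + 1.
general_xs = [i / 50 - 1 for i in range(101)]
general_ys = [2 * x + 1 for x in general_xs]
pre_w, pre_b = train(0.0, 0.0, general_xs, general_ys, steps=500)

# A small, task-specific dataset with a related rule: y = 2x + 1.5.
task_xs = [-1.0, -0.5, 0.0, 0.5, 1.0]
task_ys = [2 * x + 1.5 for x in task_xs]

# Identical small training budget (5 steps) for both runs.
ft_w, ft_b = train(pre_w, pre_b, task_xs, task_ys, steps=5)
sc_w, sc_b = train(0.0, 0.0, task_xs, task_ys, steps=5)

fine_tuned_loss = mse(ft_w, ft_b, task_xs, task_ys)
scratch_loss = mse(sc_w, sc_b, task_xs, task_ys)
print(f"fine-tuned loss:   {fine_tuned_loss:.4f}")
print(f"from-scratch loss: {scratch_loss:.4f}")
```

With the same five examples and five steps, the fine-tuned model ends with a far lower loss, because the pre-trained weights already encode most of the relationship and only the offset has to be adjusted. Real LLM and SLM fine-tuning follows the same principle at a vastly larger scale.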

Fine-Tuning of SLMs

SLMs can also be fine-tuned to enhance their performance. Fine-tuning involves exposing an SLM to specialized training data and tailoring its capabilities to a specific domain or task. This process, akin to sharpening a skill, enhances the SLM’s ability to produce accurate, relevant, and high-quality outputs.

Several recent studies have reported that smaller language models, once fine-tuned, can match or even outperform much larger models on specific tasks. This makes SLMs a cost-effective and efficient choice for many applications.

Thus, we can agree that both LLMs and SLMs have robust fine-tuning capabilities that allow them to be tailored to specific tasks or domains, thereby enhancing their performance and utility in various applications.

Unleashing the Potential of LLMs & SLMs Across Industries

How are Companies Using LLMs?

- Industry: E-commerce Platform

- Use Case: Customer Support Chatbot

In this scenario, an e-commerce platform leverages an LLM to empower a customer support chatbot. The LLM is trained to comprehend and generate human-like responses to customer inquiries. This enables the chatbot to deliver personalized and contextually relevant assistance, including addressing product-related queries, aiding with order tracking, and handling general inquiries. The deep language understanding and contextual relevance of the LLM elevate the customer support experience, leading to enhanced satisfaction and operational efficiency.

SLMs in Action

- Industry: Financial Services Firm

- Use Case: Sentiment Analysis for Customer Feedback

In this case, a financial services firm uses an SLM for sentiment analysis of customer feedback. The SLM is trained to categorize customer reviews, emails, and social media comments as positive, negative, or neutral. By leveraging the SLM's language analysis capabilities, the firm gains valuable insight into customer satisfaction, identifies areas for improvement, and makes data-driven decisions to enhance its products and services. The SLM's efficiency on structured language tasks allows the firm to process and analyze large volumes of customer feedback effectively.
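The feedback workflow described above (classify each item, then aggregate the results) might look roughly like the sketch below. The classifier here is a hypothetical keyword-matching placeholder, not an SLM; in a real deployment, `keyword_classifier` would be replaced by a call to the fine-tuned model. The feedback strings are invented examples.

```python
from collections import Counter
from typing import Callable, Dict, Iterable

LABELS = ("positive", "negative", "neutral")


def keyword_classifier(text: str) -> str:
    """Hypothetical placeholder for a fine-tuned SLM sentiment classifier.

    Uses naive substring matching on a tiny word list; a real system
    would call the model here instead.
    """
    lowered = text.lower()
    positive_words = ("great", "helpful", "easy", "love")
    negative_words = ("slow", "error", "hidden fee", "frustrat")
    score = sum(w in lowered for w in positive_words)
    score -= sum(w in lowered for w in negative_words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


def summarize_feedback(items: Iterable[str], classify: Callable[[str], str]) -> Dict[str, float]:
    """Classify each feedback item and return the share of each sentiment label."""
    counts = Counter(classify(text) for text in items)
    total = sum(counts.values())
    if total == 0:
        return {label: 0.0 for label in LABELS}
    return {label: counts[label] / total for label in LABELS}


feedback = [
    "The new mobile app is great and easy to use",
    "The transfer was slow and then showed an error",
    "Please update my mailing address",
    "Support was helpful, but I was charged a hidden fee",
]
print(summarize_feedback(feedback, keyword_classifier))
```

Passing the classifier in as a parameter keeps the aggregation logic independent of the model, so the placeholder can be swapped for a real SLM without changing `summarize_feedback`.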

Final Thoughts

In conclusion, LLMs and SLMs both offer robust fine-tuning capabilities that allow for customization of models to specific tasks or domains. This flexibility enhances their performance and utility in various industries and applications. From optimizing customer support experiences to improving data-driven decision making, LLMs and SLMs have the potential to revolutionize many industries and drive innovation.

Our AI/ML Development Services continue leveraging both LLMs and SLMs to tailor solutions that are as diverse and dynamic as your business needs them to be. With a customer-centric approach, we ensure that your digital infrastructure is not only built on the leading edge of innovation but also uniquely yours. Contact Our AI/ML Experts Today.


Stay ahead in tech with Sunflower Lab’s curated blogs, sorted by technology type. From AI to Digital Products, explore cutting-edge developments in our insightful, categorized collection. Dive in and stay informed about the ever-evolving digital landscape with Sunflower Lab.